What is Context Engineering?

At its heart, context engineering is about feeding LLMs the right information at precisely the right moment. Tobias Lütke, in a June 2025 post, called it "the art of providing all the context for the task to be plausibly solvable by the LLM." Andrej Karpathy described it as "the delicate art and science of filling the context window with just the right information for the next step" (June 2025).

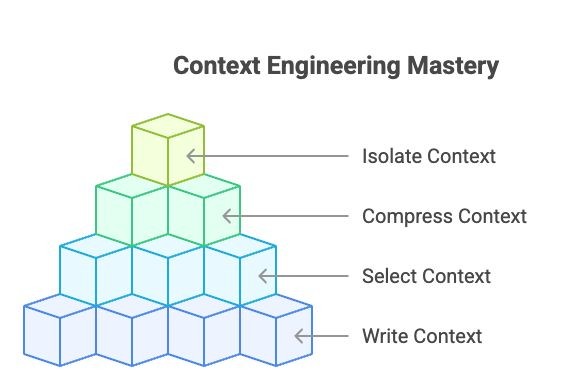

Context engineering goes beyond crafting a single prompt. It covers managing long-term memory, selecting the data relevant to the current step, compressing information so it fits the context window efficiently, and isolating contexts across agents in multi-agent systems. Together, these practices help AI agents keep track of state, adapt to new inputs, and stay on task across long, multi-step work.

For developers and businesses building AI systems, context engineering has a large effect on how well those systems perform. Whether you’re designing autonomous agents, scaling AI infrastructure, or reducing LLM costs, it is a skill worth investing in.

Why Context Engineering is a Game-Changer

With LLMs driving everything from conversational bots to enterprise-grade AI solutions, the ability to manage context effectively is paramount. Here’s why context engineering is reshaping the AI landscape:

Pinpoint Accuracy: By curating precise, relevant context, you help LLMs deliver responses that are more accurate and on-topic.

Seamless Scalability: Context engineering frameworks help systems handle complex workflows and large datasets without overflowing the model’s context window.

Unmatched Efficiency: Compressing context reduces the number of tokens the model processes, which lowers computational cost and latency.

Collaborative Intelligence: Multi-agent systems thrive on isolated contexts, enabling coordinated and autonomous AI behavior.

To help you navigate the field, we’ve curated a collection of tools and frameworks organized into four core pillars: Write Context, Select Context, Compress Context, and Isolate Context. Let’s look at each one and how you can use them to build smarter AI agents.

The Four Pillars of Context Engineering

This curated collection breaks down context engineering into four essential categories, each packed with innovative tools to elevate your AI projects. Let’s explore them.

1. Write Context: Crafting Long-Term Memory for AI Agents

Writing context is about endowing AI agents with persistent memory to ensure continuity across interactions. Long-term memory frameworks allow agents to store, retrieve, and reason over historical data, creating more intelligent and personalized experiences.

Top Tools for Writing Context:

mem0 (mem0ai): A memory layer for AI agents that includes OpenMemory MCP, a local-first memory server.

MemGPT (letta-ai): A stateful agent framework, now developed as Letta, with advanced memory and reasoning capabilities.

Graphiti (getzep): Builds real-time knowledge graphs that agents can use for memory and context awareness.

Cognee (topoteretes): A memory library for AI agents that, according to the project, can be added in five lines of code.

These tools enable agents to "remember" past interactions, making them ideal for applications like customer support, personalized recommendations, and intelligent automation.
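To make the idea concrete, here is a minimal sketch of writing context in Python. It does not use any of the libraries above: `JsonMemoryStore` and `memory_block` are hypothetical names, and memories are plain strings appended to a JSON Lines file so that a later session can read them back and place them in the prompt. Real memory frameworks typically add extraction, deduplication, and semantic search on top of this basic write-then-recall loop.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class MemoryRecord:
    user_id: str
    text: str
    created_at: float = field(default_factory=time.time)


class JsonMemoryStore:
    """Hypothetical long-term memory that persists records to a JSON Lines file."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)
        self.path.touch(exist_ok=True)  # create the file on first use

    def write(self, user_id: str, text: str) -> None:
        # Append one memory per line so earlier sessions are never overwritten.
        record = MemoryRecord(user_id=user_id, text=text)
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(asdict(record)) + "\n")

    def read(self, user_id: str) -> list[MemoryRecord]:
        # Return this user's memories in the order they were written.
        records = []
        with self.path.open(encoding="utf-8") as f:
            for line in f:
                data = json.loads(line)
                if data["user_id"] == user_id:
                    records.append(MemoryRecord(**data))
        return records


def memory_block(store: JsonMemoryStore, user_id: str, limit: int = 5) -> str:
    """Render the most recent memories as text to prepend to a prompt."""
    recent = store.read(user_id)[-limit:]
    return "Known facts about the user:\n" + "\n".join(f"- {r.text}" for r in recent)


if __name__ == "__main__":
    import os
    import tempfile

    path = os.path.join(tempfile.mkdtemp(), "memories.jsonl")
    JsonMemoryStore(path).write("alice", "Prefers answers in French.")
    # A fresh store object stands in for a later session reading the same file.
    print(memory_block(JsonMemoryStore(path), "alice"))
```

Keeping the store append-only makes each session’s writes cheap and safe; the `limit` parameter is a simple guard against memories crowding out the rest of the context window.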

2. Select Context: Curating the Perfect Information Mix

Selecting context is about pinpointing and delivering the most relevant data to an LLM at any given moment. This process ensures agents stay focused, filtering out noise and irrelevant information.

Top Tools for Selecting Context:

MCP Servers:

MCP Frameworks:

These tools ensure LLMs receive high-quality, relevant data, paving the way for sharper decision-making.
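A minimal sketch of selecting context follows. It assumes a budget measured in words rather than model tokens and uses a crude keyword-overlap score in place of the embedding similarity a production retriever would typically use; the function names and the tiny stopword list are hypothetical.

```python
import re

# Tiny illustrative stopword list; real retrievers usually rely on embeddings instead.
STOPWORDS = frozenset({"a", "an", "the", "of", "for", "to", "is", "are", "do", "i", "how", "on", "our"})


def words(text: str) -> list[str]:
    # Lowercased word tokens; a real system would count model tokens instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def terms(text: str) -> set[str]:
    # Content words only, used for scoring relevance.
    return {w for w in words(text) if w not in STOPWORDS}


def relevance(query: str, doc: str) -> float:
    # Fraction of the query's content words that also appear in the document.
    q = terms(query)
    return len(q & terms(doc)) / len(q) if q else 0.0


def select_context(query: str, docs: list[str], budget: int) -> list[str]:
    """Greedily keep the most relevant docs whose total word count fits the budget."""
    ranked = sorted(docs, key=lambda d: relevance(query, d), reverse=True)
    chosen, used = [], 0
    for doc in ranked:
        cost = len(words(doc))
        # Skip irrelevant docs and anything that would overflow the budget.
        if relevance(query, doc) > 0 and used + cost <= budget:
            chosen.append(doc)
            used += cost
    return chosen


docs = [
    "Refunds are issued within 14 days of a return.",
    "Our office is closed on public holidays.",
    "Refund requests for a return need the order number.",
]
print(select_context("How do I get a refund for my return?", docs, budget=12))
# → ['Refund requests for a return need the order number.']
```

Raising the budget to 20 admits the refund-timing sentence as well, while the holidays sentence is never selected because it shares no content words with the query.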

3. Compress Context: Boosting Efficiency Without Compromise

As the inputs to LLMs grow, compressing context helps reduce memory usage, speed up inference, and cut costs. Two common approaches are compressing the prompt itself and compressing the documents retrieved in Retrieval-Augmented Generation (RAG) pipelines.

Top Tools for Compressing Context:

Prompt Compression:

LLMLingua (Microsoft): Compresses prompts and the KV-Cache, achieving up to 20x compression with minimal performance loss.

SAMMO (Microsoft): A library for structure-aware prompt optimization.

Selective_Context: Compresses LLM input so the model can process 2x more content, which the project reports saves 40% of memory and GPU time.

These tools reduce the cost of LLM calls while keeping accuracy loss small, which makes them valuable for production-grade systems.
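The idea behind Selective_Context is to drop the parts of the input that carry the least information. The toy sketch below approximates that with a unigram model built from a small reference corpus: each word’s self-information is -log2 p(word), and the lowest-scoring words are removed. This is a simplified stand-in, not the library’s implementation, which scores lexical units with a causal language model; `build_unigram_info` and `compress` are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import Callable


def normalize(word: str) -> str:
    # Strip punctuation so "mat." and "mat" share one count.
    return re.sub(r"[^a-z0-9']", "", word.lower())


def build_unigram_info(corpus: str) -> Callable[[str], float]:
    """Return a function giving each word's self-information, -log2 p(word)."""
    counts = Counter(normalize(w) for w in corpus.split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra slot for unseen words

    def info(word: str) -> float:
        p = (counts[normalize(word)] + 1) / (total + vocab)  # add-one smoothing
        return -math.log2(p)

    return info


def compress(text: str, info: Callable[[str], float], keep_ratio: float = 0.5) -> str:
    """Keep the highest-information fraction of words, preserving original order."""
    tokens = text.split()
    k = max(1, round(len(tokens) * keep_ratio))
    # Rank word positions from most to least informative (ties keep text order).
    ranked = sorted(range(len(tokens)), key=lambda i: info(tokens[i]), reverse=True)
    return " ".join(tokens[i] for i in sorted(ranked[:k]))


info = build_unigram_info("the the the the on on mat")
print(compress("the cat sat on the mat", info, keep_ratio=0.5))
# → cat sat mat
```

Frequent, predictable words such as "the" score lowest and are dropped first, which is the same intuition that motivates self-information-based compression in general.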

4. Isolate Context: Fueling Multi-Agent Collaboration

Isolating context is vital for multi-agent systems, where agents must operate independently or collaboratively without overlap. Multi-agent frameworks provide the infrastructure to manage complex conversations and workflows.

Top Tools for Isolating Context:

MetaGPT (FoundationAgents): A multi-agent framework for AI-driven software development.

Agno (agno-agi): A full-stack framework for building multi-agent systems with memory and reasoning.

CAMEL (camel-ai): A multi-agent framework focused on scaling the number and interactions of agents.

Agent-Squad (awslabs): A flexible framework for managing multiple AI agents and complex conversations.

These frameworks help developers build collaborative AI systems in which each agent works from its own context while contributing to a shared workflow.
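Isolation can be sketched as each agent owning a private message history, with only final answers passed between agents. In the hypothetical example below, `fake_llm` stands in for a real model call, and `Agent` and `orchestrate` are illustrative names rather than the API of any framework listed above.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model interface: takes a message list, returns the reply text.
LLM = Callable[[list[dict]], str]


@dataclass
class Agent:
    name: str
    system_prompt: str
    llm: LLM
    history: list[dict] = field(default_factory=list)  # private to this agent

    def run(self, task: str) -> str:
        # The system prompt is added once, on the agent's first turn.
        if not self.history:
            self.history.append({"role": "system", "content": self.system_prompt})
        self.history.append({"role": "user", "content": task})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def orchestrate(task: str, researcher: Agent, writer: Agent) -> str:
    # Only the researcher's final reply crosses the boundary; its system prompt
    # and intermediate turns stay in its own history.
    notes = researcher.run(f"Collect facts for: {task}")
    return writer.run(f"Write a summary using these notes:\n{notes}")


def fake_llm(messages: list[dict]) -> str:
    # Stub standing in for a real model call.
    return f"reply to {len(messages)} messages"


researcher = Agent("researcher", "You gather facts.", fake_llm)
writer = Agent("writer", "You write summaries.", fake_llm)
print(orchestrate("context engineering", researcher, writer))  # → reply to 2 messages
print(len(researcher.history), len(writer.history))  # → 3 3
```

Because the writer only ever sees the researcher’s final notes, the researcher’s system prompt and intermediate turns never take up space in the writer’s context window.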

Getting Started with Context Engineering

This curated collection of tools and frameworks is a starting point for mastering context engineering. Whether you’re a seasoned AI developer or just starting out, these resources will help you build smarter, more efficient AI agents. From memory management to multi-agent systems, the tools span all four pillars of context engineering.

To begin, explore the tools listed above, experiment with them in your projects, and join the growing community of AI innovators. Share your own tools or frameworks to contribute to the collective advancement of AI!

Why Context Engineering Shapes the Future

As AI reshapes industries, context engineering is emerging as a critical skill for developers. By mastering the art of writing, selecting, compressing, and isolating context, you can unlock the full potential of LLMs and create agents that are faster, smarter, and more reliable.

Here are a few tips to excel at context engineering:

Explore Diverse Tools: Experiment with frameworks like mem0, LLMLingua, or MetaGPT to discover what suits your needs.

Scale Smartly: Leverage compression techniques to ensure your AI systems remain efficient as they grow.

Join the Community: Contribute to open-source AI projects to stay at the cutting edge of innovation.

Stay Inspired: Follow thought leaders like Tobias Lütke and Andrej Karpathy for the latest insights.

Conclusion

Context engineering isn’t just a trend; it is becoming the backbone of next-generation AI systems. By skillfully curating and managing context, developers can create AI agents that are intelligent, efficient, and scalable. This collection of tools and frameworks offers a practical starting point for diving into context engineering and building the future of AI.

Ready to transform your AI projects? Embrace context engineering, explore the tools, and join the community of innovators driving AI forward. Share your insights, collaborate, and let’s shape a smarter future together!